XFS vs ZFS: Choosing the Right Filesystem for Your Workload

The core difference between XFS and ZFS boils down to a fundamental trade-off: speed versus safety. XFS is a lean, mean, single-host journaling filesystem built for raw performance and massive scalability. ZFS, on the other hand, is an integrated volume manager and filesystem engineered for absolute data integrity and a powerful set of built-in features. The filesystem you choose will shape everything from raw performance and data protection to your day-to-day admin tasks. This is a critical decision for any system administrator or DevOps engineer, as each one represents a completely different philosophy on how storage should be managed.

Ready to optimize your server costs without complex scripts? Server Scheduler can help you automate start/stop schedules for AWS resources and cut bills by up to 70%.

This choice isn't just about technical specs; it's a strategic move that affects reliability, cost, and complexity down the line. Getting it right is a fundamental part of cloud cost optimization, since the wrong filesystem can lead to expensive data recovery or performance headaches. We'll dig into their architectural quirks, real-world performance, and management overhead to help you pick the right tool for the job.



Understanding Core Architectural Differences

To really get to the bottom of the XFS vs. ZFS debate, we have to start with how they were built from the ground up. Their core designs define their strengths, weaknesses, and ultimately where they fit best. XFS is a classic, highly tuned journaling filesystem that sits right on top of a block device; it's all about raw speed and efficiency. ZFS, on the other hand, is a completely different beast: it bundles the volume manager and the filesystem together, with an obsessive focus on data integrity. This architectural split is the single most important thing to understand. Picking the right one isn't about ticking off features on a list; it's about figuring out which design philosophy lines up with your workloads and how much risk you're willing to take.

XFS was built for one thing: performance. It pulls this off by being a lean, 64-bit journaling filesystem that sits directly on whatever block device it is given, whether that's a single disk or a fancy hardware RAID array. Its secret sauce is extent-based allocation. Instead of tracking every single block a file uses, XFS records each run of contiguous blocks as one "extent" (a starting block plus a length). This slashes metadata overhead, making file allocation and access incredibly fast, especially for the massive, sequential files you see in media streaming or scientific computing. XFS is like a high-performance race car engine. It's meticulously engineered for speed and efficiency, but it expects the rest of the car (the chassis, brakes, and safety systems, which here means your hardware RAID controller) to handle reliability and protection.
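XFS does this inside the kernel, but the core idea of extent-based allocation fits in a few lines. The following is a minimal sketch, assuming a file is described by the list of physical block numbers it occupies, in file order; `blocks_to_extents` is a hypothetical name. Real XFS extent records also carry the logical file offset and an "unwritten" flag, and are stored in the inode or, for heavily fragmented files, in a B+tree.

```python
def blocks_to_extents(blocks: list[int]) -> list[tuple[int, int]]:
    """Collapse a file's physical block numbers (in file order) into
    (start_block, length) extents. Illustrative model only."""
    extents: list[tuple[int, int]] = []
    for block in blocks:
        if extents and extents[-1][0] + extents[-1][1] == block:
            # Physically contiguous with the current extent: just extend it.
            start, length = extents[-1]
            extents[-1] = (start, length + 1)
        else:
            # First block, or a gap on disk: start a new extent.
            extents.append((block, 1))
    return extents


# A contiguous 1,000-block file needs one record instead of 1,000.
print(blocks_to_extents(list(range(5000, 6000))))  # [(5000, 1000)]
print(blocks_to_extents([10, 11, 12, 40, 41]))     # [(10, 3), (40, 2)]
```

The fewer the gaps, the fewer the records, which is why large files written sequentially map so cheaply.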

ZFS flips the script entirely by merging the filesystem and volume manager into one cohesive unit. It doesn't just format a partition someone else created; it takes direct control of physical disks and organizes them into a storage pool, called a zpool. This all-in-one design is what enables its legendary data protection features. The foundation of ZFS is its copy-on-write (CoW) transactional model. When data is modified, ZFS never overwrites the original block. Instead, it writes the new data to a completely new block and then atomically updates the metadata to point to the new location. This simple but powerful method means the filesystem is always in a consistent state, pretty much eliminating the risk of corruption from a sudden power outage during a write.
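To make the copy-on-write sequence concrete, here is a toy Python model, assuming a store where a single dictionary (`root`) stands in for the metadata tree that ZFS commits atomically through its uberblock. The `CowStore` class and its methods are hypothetical and bear no relation to ZFS's on-disk format; the sketch only shows why a write never damages the previous consistent state and why snapshots come almost for free.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class CowStore:
    """Toy copy-on-write store (hypothetical model, not ZFS's format).

    `blocks` plays the disk (block id -> contents); `root` maps file names to
    block ids. Old blocks are never freed here, whereas ZFS frees them once
    nothing (such as a snapshot) references them any more.
    """
    blocks: Dict[int, bytes] = field(default_factory=dict)
    root: Dict[str, int] = field(default_factory=dict)
    next_id: int = 0

    def write(self, name: str, data: bytes) -> None:
        # 1. Write the new data to a fresh block; the old block is left intact.
        new_id = self.next_id
        self.next_id += 1
        self.blocks[new_id] = data
        # 2. Build updated metadata that points at the new block...
        new_root = dict(self.root)
        new_root[name] = new_id
        # 3. ...and publish it with one reference swap. A crash before this
        #    point leaves the previous, fully consistent root in place.
        self.root = new_root

    def snapshot(self) -> Dict[str, int]:
        # An old root stays valid because its blocks are never overwritten.
        return self.root

    def read(self, name: str, root: Optional[Dict[str, int]] = None) -> bytes:
        tree = self.root if root is None else root
        return self.blocks[tree[name]]


store = CowStore()
store.write("config", b"v1")
snap = store.snapshot()
store.write("config", b"v2")
print(store.read("config"))        # b'v2'
print(store.read("config", snap))  # b'v1'
```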

Callout: For DevOps and FinOps teams, this architectural split has a direct line to operational overhead and your cloud bill. An XFS setup can extract excellent raw performance from AWS EBS volumes, but you're still on the hook for configuring and managing any RAID at a separate layer. ZFS on EC2 consolidates everything, but its higher resource usage, especially RAM, can raise infrastructure costs.

Analyzing Data Integrity and Protection Mechanisms

When you get right down to it, data integrity is often the single biggest reason teams choose one filesystem over the other. ZFS is built from top to bottom with an almost obsessive focus on preventing data loss and silent corruption. XFS, on the other hand, delivers rock-solid, high-performance reliability and protects its own metadata with checksums on modern (v5) filesystems, but it trusts the underlying hardware to protect the integrity of file data. This distinction is the crux of the decision: do you want a filesystem that actively polices your data, or one that trusts the rest of your storage stack to do its job?

ZFS's built-in protections align with broader IT security considerations and offer real peace of mind, though a solid backup strategy, as detailed in our guide on backing up Linux systems, is still essential.

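ZFS stores each block's checksum in the parent block pointer rather than next to the data, so a read can catch corruption the disk never reported; on a redundant vdev such as a mirror, ZFS can then rewrite the bad copy from a good one. The sketch below models that read path in Python, assuming a two-way mirror held in memory. SHA-256 from the standard library stands in for ZFS's configurable checksum (fletcher4 by default), and the function names are hypothetical.

```python
import hashlib
from typing import List, Optional


def checksum(data: bytes) -> bytes:
    # SHA-256 stands in for ZFS's configurable algorithm (fletcher4 by default).
    return hashlib.sha256(data).digest()


def read_verified(copies: List[bytearray], expected: bytes) -> Optional[bytes]:
    """Read one logical block from a mirror, verifying and repairing copies.

    `copies` models the redundant copies of the block on a mirror vdev;
    `expected` is the checksum kept in the parent block pointer, i.e. stored
    apart from the data it protects.
    """
    good = next((bytes(c) for c in copies if checksum(bytes(c)) == expected), None)
    if good is None:
        return None  # no valid copy: report an error instead of returning bad data
    for c in copies:
        if checksum(bytes(c)) != expected:
            c[:] = good  # self-heal: overwrite the damaged copy with good data
    return good


block = b"important block contents"
stored = checksum(block)
mirror = [bytearray(block), bytearray(block)]
mirror[0][0] ^= 0xFF  # simulate silent bit rot on one disk
print(read_verified(mirror, stored) == block)  # True
print(bytes(mirror[0]) == block)               # True: the bad copy was repaired
```

Without redundancy, the same check still detects the corruption; it just cannot repair it, which is why ZFS is usually deployed on mirrors or RAID-Z.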

Comparing Performance Profiles and Scalability

Performance is where the architectural theory of XFS and ZFS hits the road. Their underlying designs create distinct advantages that show up loud and clear depending on the kind of workload you throw at them. XFS is built for pure, unadulterated speed in specific scenarios, while ZFS trades some of that raw power for intelligent caching and the overhead that comes with robust data protection. When it comes to high-throughput, sequential operations, XFS is the undisputed champ. Its design keeps CPU overhead low, and it splits each filesystem into independent allocation groups so that allocations and metadata updates can proceed in parallel, making it exceptionally fast for workloads dealing with very large files. This makes XFS a natural fit for media streaming servers, scientific computing, and certain database backends where large, continuous data streams are the norm.

ZFS takes a completely different angle on performance. Its raw throughput can lag behind XFS because of the overhead from its copy-on-write model and constant data checksumming. But what it lacks in top-end speed, it often more than makes up for with its incredibly smart caching system. ZFS uses the Adaptive Replacement Cache (ARC), a sophisticated caching mechanism that lives in RAM and intelligently keeps both frequently and recently accessed data ready to go. For workloads with a chaotic mix of random reads and writes—think virtualization hosts or busy file servers—the ARC can deliver phenomenal performance.
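For a feel for how a cache can balance "recently used" against "frequently used", here is a Python sketch of the published ARC algorithm (Megiddo and Modha, 2003), assuming it caches block keys only, with no data and no memory accounting. OpenZFS's real ARC is a heavily modified descendant that handles variable-sized buffers and resizes itself under memory pressure, so treat this as an illustration of the idea rather than of ZFS's code. `ARCCache` and its methods are hypothetical names.

```python
from collections import OrderedDict


class ARCCache:
    """Adaptive Replacement Cache over block keys (Megiddo & Modha, 2003).

    T1 holds cached keys seen once recently, T2 cached keys seen at least twice.
    B1 and B2 are "ghost" lists remembering keys recently evicted from T1/T2
    (keys only, no data). p is the adaptive target size for T1.
    """

    def __init__(self, capacity: int) -> None:
        self.c = capacity
        self.p = 0.0
        self.t1: OrderedDict = OrderedDict()  # LRU end first, MRU end last
        self.t2: OrderedDict = OrderedDict()
        self.b1: OrderedDict = OrderedDict()
        self.b2: OrderedDict = OrderedDict()

    def _replace(self, key) -> None:
        # Evict from T1 when it is over its target, otherwise from T2; the
        # evicted key is remembered in the matching ghost list.
        if self.t1 and (len(self.t1) > self.p
                        or (key in self.b2 and len(self.t1) == self.p)):
            old, _ = self.t1.popitem(last=False)
            self.b1[old] = None
        else:
            old, _ = self.t2.popitem(last=False)
            self.b2[old] = None

    def access(self, key) -> bool:
        """Record one access to `key`; return True on a cache hit."""
        if key in self.t1 or key in self.t2:
            # Hit: move to the MRU end of T2, the "frequently used" list.
            self.t1.pop(key, None)
            self.t2.pop(key, None)
            self.t2[key] = None
            return True
        if key in self.b1:
            # Ghost hit in B1: recency would have helped, so grow T1's target.
            self.p = min(self.c, self.p + max(len(self.b2) / len(self.b1), 1))
            self._replace(key)
            del self.b1[key]
            self.t2[key] = None
            return False
        if key in self.b2:
            # Ghost hit in B2: frequency would have helped, so shrink T1's target.
            self.p = max(0.0, self.p - max(len(self.b1) / len(self.b2), 1))
            self._replace(key)
            del self.b2[key]
            self.t2[key] = None
            return False
        # Complete miss: make room if needed, then insert at the MRU end of T1.
        l1 = len(self.t1) + len(self.b1)
        total = l1 + len(self.t2) + len(self.b2)
        if l1 == self.c:
            if len(self.t1) < self.c:
                self.b1.popitem(last=False)
                self._replace(key)
            else:
                self.t1.popitem(last=False)
        elif total >= self.c:
            if total == 2 * self.c:
                self.b2.popitem(last=False)
            self._replace(key)
        self.t1[key] = None
        return False

    def cached(self) -> set:
        return set(self.t1) | set(self.t2)


arc = ARCCache(capacity=2)
for key in ["a", "a", "b", "c"]:  # "a" is reused; "b" and "c" are one-off reads
    arc.access(key)
print(sorted(arc.cached()))  # ['a', 'c']
```

With plain LRU and the same capacity, that access sequence would evict the reused block `a` and keep `b` and `c`; ARC's frequency list protects `a` from the one-off reads.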

Both filesystems scale to enormous sizes, but their approaches are fundamentally different. XFS scales vertically, allowing a single filesystem to grow to an incredible capacity (Red Hat has long tested and supported single XFS filesystems of 500 TB, while the format's theoretical limit is 8 EiB), making it perfect for creating one single, vast storage volume. Growing it means first enlarging the underlying partition or logical volume with external tools, as detailed in our guide on how to expand a partition in Ubuntu, and then running xfs_growfs; an XFS filesystem cannot be shrunk. ZFS, on the other hand, scales horizontally through its pooled storage model. A zpool is built from virtual devices (vdevs), and you can add more vdevs to a live pool to grow both capacity and performance, since ZFS stripes data across all of them. This design makes it simple to start with a modest storage setup and grow it incrementally over time without planning complex migrations.
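The pooled model makes capacity planning simple arithmetic: a pool's usable space is roughly the sum of its vdevs' usable space. The Python sketch below is a rough estimate under stated assumptions. It ignores metadata, RAID-Z allocation padding, and ZFS's reserved "slop" space, so real usable capacity will be somewhat lower. The `Vdev` class and the disk sizes are hypothetical.

```python
from dataclasses import dataclass
from typing import List

# Parity disks consumed per RAID-Z level.
PARITY = {"raidz1": 1, "raidz2": 2, "raidz3": 3}


@dataclass
class Vdev:
    kind: str              # "disk", "mirror", "raidz1", "raidz2" or "raidz3"
    disks_tb: List[float]  # raw size of each member disk, in TB

    def usable_tb(self) -> float:
        smallest = min(self.disks_tb)  # every member is treated as the smallest disk
        if self.kind in ("disk", "mirror"):
            return smallest  # a mirror stores one disk's worth of data
        return (len(self.disks_tb) - PARITY[self.kind]) * smallest


def pool_usable_tb(vdevs: List[Vdev]) -> float:
    # A pool stripes data across all of its vdevs, so capacity adds up.
    return sum(v.usable_tb() for v in vdevs)


pool = [Vdev("raidz2", [4.0] * 6)]
print(pool_usable_tb(pool))  # 16.0
pool.append(Vdev("raidz2", [8.0] * 6))  # like `zpool add`: one more vdev
print(pool_usable_tb(pool))  # 48.0
```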

| Feature | XFS | ZFS |
|---|---|---|
| Best Workload | High-throughput, sequential I/O (e.g., large media files) | Mixed, random I/O (e.g., virtualization, databases, file servers) |
| Key Performance Feature | Low CPU overhead, efficient metadata handling | Adaptive Replacement Cache (ARC) and optional L2ARC SSD caching |
| Scalability Model | Vertical: Scales a single filesystem to huge sizes | Horizontal: Scales by adding new virtual devices (vdevs) to a pool |
| Growth Management | Enlarge the underlying device with external tools, then grow online with xfs_growfs; cannot be shrunk | Simple to add new vdevs to expand an existing pool without downtime |

Administration, Snapshots, and Replication

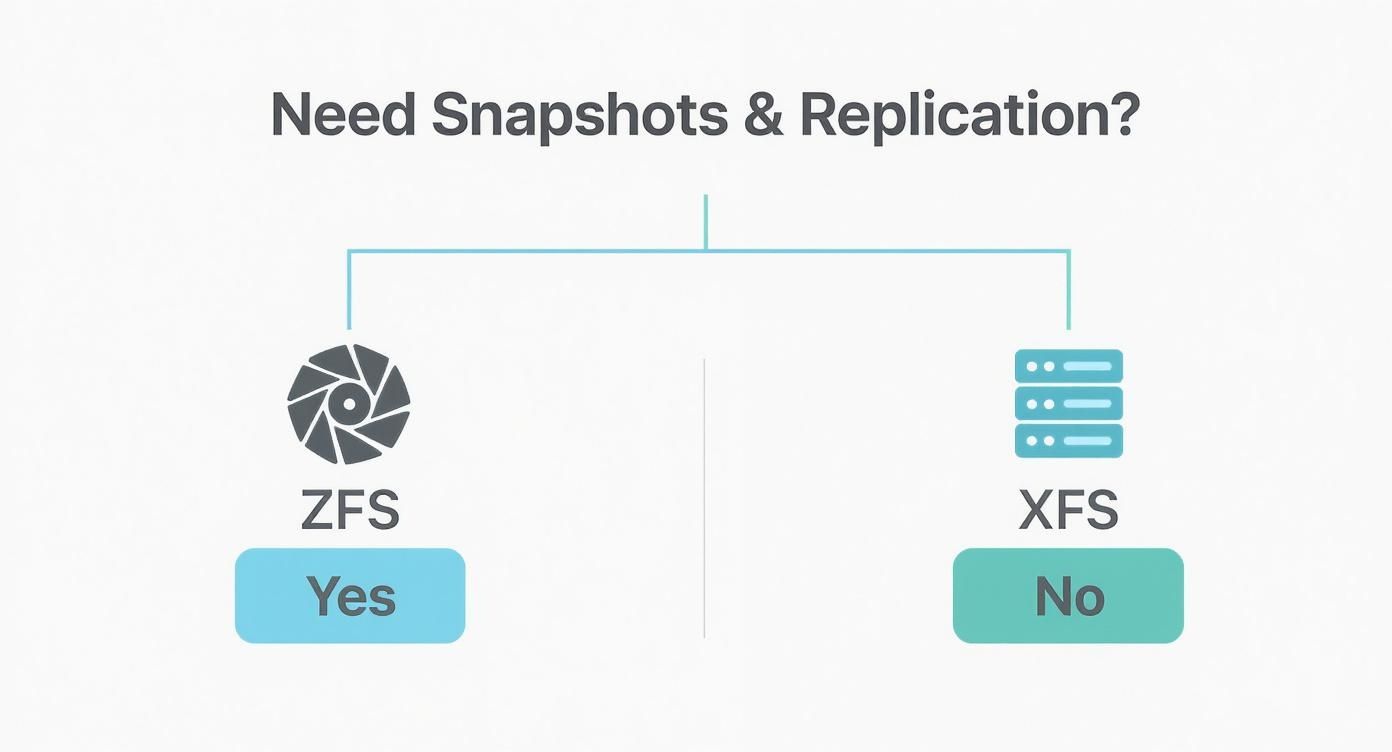

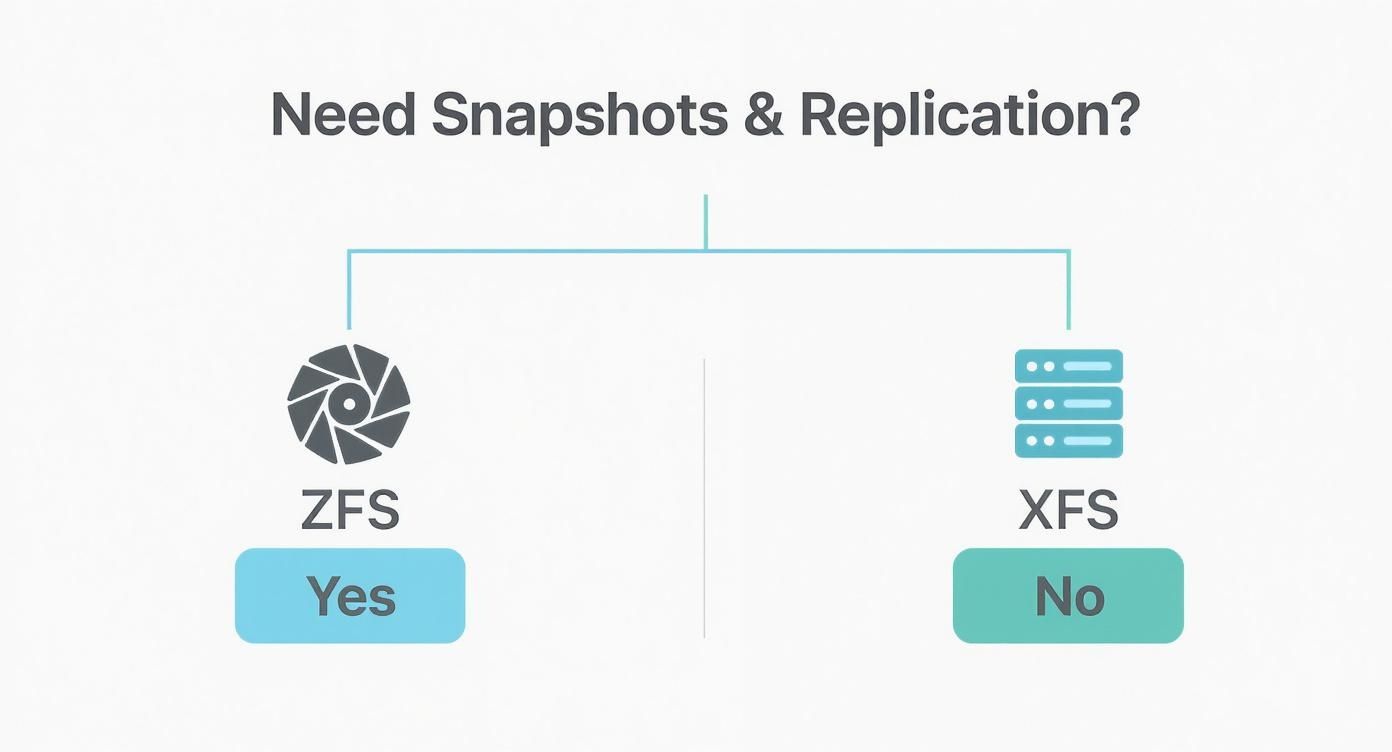

Beyond raw performance, the day-to-day administrative experience is often what makes or breaks a filesystem choice. ZFS offers a beautifully integrated suite of tools for advanced storage management, while XFS sticks to a more traditional, layered approach that’s mature but requires more assembly. ZFS truly shines here, known for its administrative simplicity and powerful, built-in features that just work out of the box. You primarily interact with it through two commands: zpool for storage pools and zfs for datasets. The two killer features are its lightweight, instantaneous snapshots and its native replication capabilities. Firing off a zfs snapshot is an atomic, near-instant operation, and zfs send/receive commands allow for efficient, block-level replication to another system—a huge win for DevOps automation.

For replication handled at the application layer rather than the filesystem, see our guide on database replication strategies.

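As a sketch of how zfs snapshot and zfs send/receive combine in DevOps automation, the Python below builds one replication cycle and, by default, only prints the commands. The dataset names `tank/data` and `backup/data`, the host `backup-host`, and the `auto-` snapshot naming scheme are hypothetical. Running it for real assumes SSH key access to the target, sufficient ZFS permissions on both ends, and, for an incremental send, that the target already holds the previous snapshot.

```python
import shlex
import subprocess
from datetime import datetime, timezone
from typing import List, Optional, Tuple


def build_commands(dataset: str, target_host: str, target_dataset: str,
                   when: datetime, previous: Optional[str] = None
                   ) -> Tuple[List[str], List[str], List[str]]:
    """Return (snapshot, send, receive) argv lists for one replication cycle."""
    snap = f"{dataset}@auto-{when:%Y%m%d-%H%M%S}"  # hypothetical naming scheme
    snapshot_cmd = ["zfs", "snapshot", snap]
    # With -i, only blocks changed since `previous` are streamed; the target
    # must already hold `previous` from an earlier full send.
    send_cmd = ["zfs", "send"] + (["-i", previous] if previous else []) + [snap]
    recv_cmd = ["ssh", target_host, "zfs", "receive", target_dataset]
    return snapshot_cmd, send_cmd, recv_cmd


def run(snapshot_cmd: List[str], send_cmd: List[str], recv_cmd: List[str],
        dry_run: bool = True) -> None:
    if dry_run:
        print(shlex.join(snapshot_cmd))
        print(f"{shlex.join(send_cmd)} | {shlex.join(recv_cmd)}")
        return
    subprocess.run(snapshot_cmd, check=True)
    # Equivalent of `zfs send ... | ssh host zfs receive ...` without a shell.
    sender = subprocess.Popen(send_cmd, stdout=subprocess.PIPE)
    receiver = subprocess.Popen(recv_cmd, stdin=sender.stdout)
    sender.stdout.close()  # so the sender sees a broken pipe if the receiver dies
    receiver.wait()
    sender.wait()
    for proc, cmd in ((sender, send_cmd), (receiver, recv_cmd)):
        if proc.returncode != 0:
            raise subprocess.CalledProcessError(proc.returncode, cmd)


if __name__ == "__main__":
    cmds = build_commands("tank/data", "backup-host", "backup/data",
                          datetime.now(timezone.utc),
                          previous="tank/data@auto-20240101-000000")
    run(*cmds)  # prints the commands; pass dry_run=False to execute them
```

Keeping command construction separate from execution makes the naming and incremental logic easy to test before anything touches a real pool.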

Practical Use Cases and Final Recommendations

Deciding between XFS and ZFS boils down to a single question: what’s more important for your workload, raw speed or bulletproof data integrity? There’s no silver bullet here. The right filesystem depends entirely on your specific needs and how much risk you’re willing to accept. XFS is an absolute beast for high-throughput, sequential I/O where you have a solid hardware RAID controller handling data protection. It shines in large-scale media storage, high-performance computing (HPC), and specific database workloads that perform large, sequential writes. In short, pick XFS when performance is measured in gigabytes per second and you trust your hardware.

When you absolutely cannot afford to lose or corrupt data, ZFS is the only real choice. It’s a complete storage platform that actively protects against silent data corruption, making administration simpler and your data safer. ZFS is the go-to for enterprise file servers, virtualization hosts, and archival and backup storage. In a cloud environment like AWS, this choice has real FinOps implications. Pairing XFS with an EBS volume is fast and straightforward, but running ZFS on an EC2 instance gives you total control over integrity and replication, which can simplify the backup strategy for anyone using an EC2 instance scheduler to manage costs.

Frequently Asked Questions About XFS and ZFS

Can I convert an XFS filesystem to ZFS in place?

A direct, in-place conversion from XFS to ZFS just isn't possible because their underlying architectures are fundamentally different. The only way to migrate is the old-fashioned way: back up all data from your XFS volume, create a brand new ZFS pool on the hardware, and then restore your data onto the new pool.
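After the restore, it is worth confirming that every file arrived intact before retiring the old volume. The following is a minimal Python sketch that compares SHA-256 digests of two directory trees; it checks file contents only, not permissions, ownership, or extended attributes, and the function names are hypothetical.

```python
import hashlib
import os
from pathlib import Path
from typing import Dict, List


def tree_digests(root: Path) -> Dict[str, str]:
    """Map each regular file under `root` (relative path) to its SHA-256 digest."""
    digests: Dict[str, str] = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            if path.is_symlink() or not path.is_file():
                continue  # this sketch compares regular files only
            h = hashlib.sha256()
            with path.open("rb") as f:
                for chunk in iter(lambda: f.read(1 << 20), b""):  # 1 MiB reads
                    h.update(chunk)
            digests[path.relative_to(root).as_posix()] = h.hexdigest()
    return digests


def compare_trees(source: Path, restored: Path) -> List[str]:
    """List relative paths that are missing, extra, or different after a restore."""
    src, dst = tree_digests(source), tree_digests(restored)
    return sorted(p for p in src.keys() | dst.keys() if src.get(p) != dst.get(p))
```

For example, `compare_trees(Path("/mnt/old-xfs"), Path("/tank/data"))`, with both mount points hypothetical, returns an empty list when every file matches.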

Does ZFS require ECC RAM?

While ZFS doesn't technically require ECC RAM to function, it is very strongly recommended. ZFS is built for data integrity, which means it implicitly trusts that the data it receives from memory is correct. ECC (Error-Correcting Code) RAM is the hardware-level defense against memory corruption. Using non-ECC RAM with ZFS creates a potential weak link in the chain of data integrity. If you're serious about protecting your data, ECC RAM is a must-have.

Take Control of Your Cloud Costs

Ready to stop overspending on idle cloud resources? With Server Scheduler, you can visually define start/stop schedules for AWS resources and cut cloud bills by up to 70%, no scripts needed. Get started with Server Scheduler today!